ZETIC.MLange LLM Model

Overview

ZETIC.MLange LLM Model provides an abstraction layer for LLM (Large Language Model) implementations built on ZETIC.ai’s infrastructure. It offers a developer-friendly interface for downloading and running LLMs on mobile devices, and it manages model downloads for you.

Model Support

Currently tested models include:

DeepSeek-R1-Distill-Qwen-1.5B-F16

TinyLlama-1.1B-Chat-v1.0

Model compatibility depends on device capacity.
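As a rough way to reason about device capacity, the memory needed just to hold a model's weights can be estimated as parameter count times bits per weight, divided by 8. The sketch below applies that arithmetic to the two models listed above. The parameter counts are the nominal figures from the model names, and 16-bit weights for TinyLlama are an assumption (the source only states F16 for the DeepSeek model). Runtime buffers such as the KV cache add to this figure, so treat the result as a lower bound, not a measured requirement.

```kotlin
// Lower-bound estimate of weight storage: parameters * bitsPerWeight / 8 bytes.
// This ignores the KV cache, activations, and any runtime overhead.
fun weightBytes(parameters: Long, bitsPerWeight: Int): Long =
    parameters * bitsPerWeight / 8

fun main() {
    val gib = 1024.0 * 1024.0 * 1024.0

    // Nominal parameter counts taken from the model names (assumption).
    val models = mapOf(
        "DeepSeek-R1-Distill-Qwen-1.5B-F16" to 1_500_000_000L,
        "TinyLlama-1.1B-Chat-v1.0" to 1_100_000_000L,
    )

    for ((name, parameters) in models) {
        // 16 bits per weight is stated for DeepSeek (F16) and assumed for TinyLlama.
        val bytes = weightBytes(parameters, 16)
        println("%s: roughly %.1f GiB of weights".format(name, bytes / gib))
    }
}
```

Comparing this estimate against the RAM actually available to your app gives a first indication of whether a model is likely to load on a given device.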

[Performance and Latency section to be added]

Core Concept

Backend Abstraction

Supports multiple LLM backends, including LLaMA.cpp

Handles model initialization and runtime management

Provides a unified interface across different backend implementations
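The idea of a unified interface over several backends can be illustrated with a small, generic sketch. Every name below (`Backend`, `BackendKind`, `EchoBackend`, `ShoutBackend`, `UnifiedModel`) is hypothetical and exists only for this example. It is not ZETIC.MLange's internal design, and the toy backends simply split the prompt into words instead of running a model.

```kotlin
// Hypothetical names throughout; this only illustrates the pattern.
enum class BackendKind { ECHO, SHOUT }

// What each backend must provide: a list of tokens for a prompt.
interface Backend {
    fun generate(prompt: String): List<String>
}

// Toy backend: returns the prompt's words unchanged.
class EchoBackend : Backend {
    override fun generate(prompt: String): List<String> = prompt.split(" ")
}

// Toy backend: returns the prompt's words in upper case.
class ShoutBackend : Backend {
    override fun generate(prompt: String): List<String> =
        prompt.uppercase().split(" ")
}

// Callers see the same run / waitForNextToken pair regardless of backend.
class UnifiedModel(kind: BackendKind) {
    private val backend: Backend = when (kind) {
        BackendKind.ECHO -> EchoBackend()
        BackendKind.SHOUT -> ShoutBackend()
    }
    private var pending: Iterator<String> = emptyList<String>().iterator()

    fun run(prompt: String) {
        pending = backend.generate(prompt).iterator()
    }

    // Returns the next token, or an empty string once output is exhausted.
    fun waitForNextToken(): String =
        if (pending.hasNext()) pending.next() else ""
}

fun main() {
    val model = UnifiedModel(BackendKind.SHOUT)
    model.run("hello on device")
    while (true) {
        val token = model.waitForNextToken()
        if (token.isEmpty()) break
        println(token)
    }
}
```

The calling code in `main` stays the same when `BackendKind.SHOUT` is swapped for `BackendKind.ECHO`, which is the property the abstraction is meant to provide.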

API Reference

Initialization

init(personalKey: String, modelKey: String, target: LLMTarget, quantType: LLMQuantType)
Selects an exact backend target and quantization type.

init(personalKey: String, modelKey: String, modelMode: LLMModelMode, dataSetType: LLMDataSetType)
Downloads a device-appropriate model using the prepared personal key and model key.

Both initializers set up the LLM model with the proper backend.

For more information about mode selection, see the LLM Inference Mode Select page.

Conversation

run(prompt: String)
Starts a conversation with the provided prompt.

waitForNextToken(): String
Returns the next generated token. An empty string indicates completion.
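The contract described above (call `run` once, then call `waitForNextToken` until it returns an empty string) maps naturally onto a Kotlin `Sequence`. The sketch below shows one way to wrap it. `TokenSource` and `ScriptedSource` are hypothetical stand-ins that mirror the two documented signatures so the example runs without the SDK. They are not part of ZETIC.MLange.

```kotlin
// Hypothetical stand-in mirroring the two documented conversation calls.
interface TokenSource {
    fun run(prompt: String)
    fun waitForNextToken(): String
}

// Test double: replays a fixed token list, then returns "" to signal completion.
class ScriptedSource(private val tokens: List<String>) : TokenSource {
    private var index = 0

    override fun run(prompt: String) {
        index = 0
    }

    override fun waitForNextToken(): String =
        if (index < tokens.size) tokens[index++] else ""
}

// Starts generation and exposes the tokens as a lazy, single-pass Sequence
// that ends at the first empty string.
fun TokenSource.tokens(prompt: String): Sequence<String> {
    this.run(prompt)
    return generateSequence { waitForNextToken().takeIf { it.isNotEmpty() } }
}

fun main() {
    val source = ScriptedSource(listOf("Hello", ", ", "world"))
    // Joins the streamed tokens into the full response text.
    println(source.tokens("Say hello").joinToString(""))  // prints "Hello, world"
}
```

Because the sequence is lazy, each token is requested only when the consumer asks for it, so the same wrapper works for incremental display and for collecting the full response.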

Implement ZETIC.LLM.Model in your project

Prerequisites

[Model key generation section to be added]

We prepared a model key for the demo app: deepseek-r1-distill-qwen-1.5b-f16. You can use this model key to try the ZETIC.LLM.MLange Application.

Android app

For the detailed application setup, please follow the deploy to Android Studio page.

ZETIC.LLM.MLange usage in Kotlin

    val model = ZeticMLangeLLMModel(context, tokenKey, modelKey, LLMModelMode.RUN_DEFAULT)
    model.run("prompt")
    while (true) {
        val token = model.waitForNextToken()
        if (token == "") break  // an empty string signals completion
        // add token to your chat bubble text of the ai agent
    }
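The "add token to your chat bubble" step can be sketched as a small helper that accumulates tokens and reports the text so far after each one. The helper name `streamInto`, the `onUpdate` callback, and the `maxTokens` cap are all assumptions introduced for this example. The source does not say whether a generation may be abandoned early, so treat the cap as an illustrative guard only. The token supplier is a plain function so the example runs without the SDK.

```kotlin
// Hypothetical helper: pulls tokens until an empty string (completion) or
// until maxTokens is reached, reporting the accumulated text after each token.
fun streamInto(
    nextToken: () -> String,
    maxTokens: Int,
    onUpdate: (String) -> Unit,
): String {
    val text = StringBuilder()
    var count = 0
    while (count < maxTokens) {
        val token = nextToken()
        if (token.isEmpty()) break  // empty string signals completion
        text.append(token)
        onUpdate(text.toString())   // e.g. refresh the chat bubble
        count++
    }
    return text.toString()
}

fun main() {
    // Stand-in token supplier; replays a fixed list, then returns "".
    val queue = ArrayDeque(listOf("On", "-device", " LLM"))
    val reply = streamInto(
        nextToken = { queue.removeFirstOrNull() ?: "" },
        maxTokens = 256,
        onUpdate = { partial -> println("bubble: $partial") },
    )
    println("final: $reply")
}
```

With the real model, the supplier would presumably be `{ model.waitForNextToken() }`, assuming the method has the signature documented in the API reference. On Android, this loop should run off the main thread, with `onUpdate` posting back to the UI.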

iOS app

For the detailed application setup, please follow the deploy to Xcode page.

ZETIC.LLM.MLange usage in Swift

    let model = ZeticMLangeLLMModel(tokenKey, modelKey, .RUN_DEFAULT)
    model.run("prompt")
    while true {
        let token = model.waitForNextToken()
        if token == "" { break }  // an empty string signals completion
        // add token to your chat bubble text of the ai agent
    }

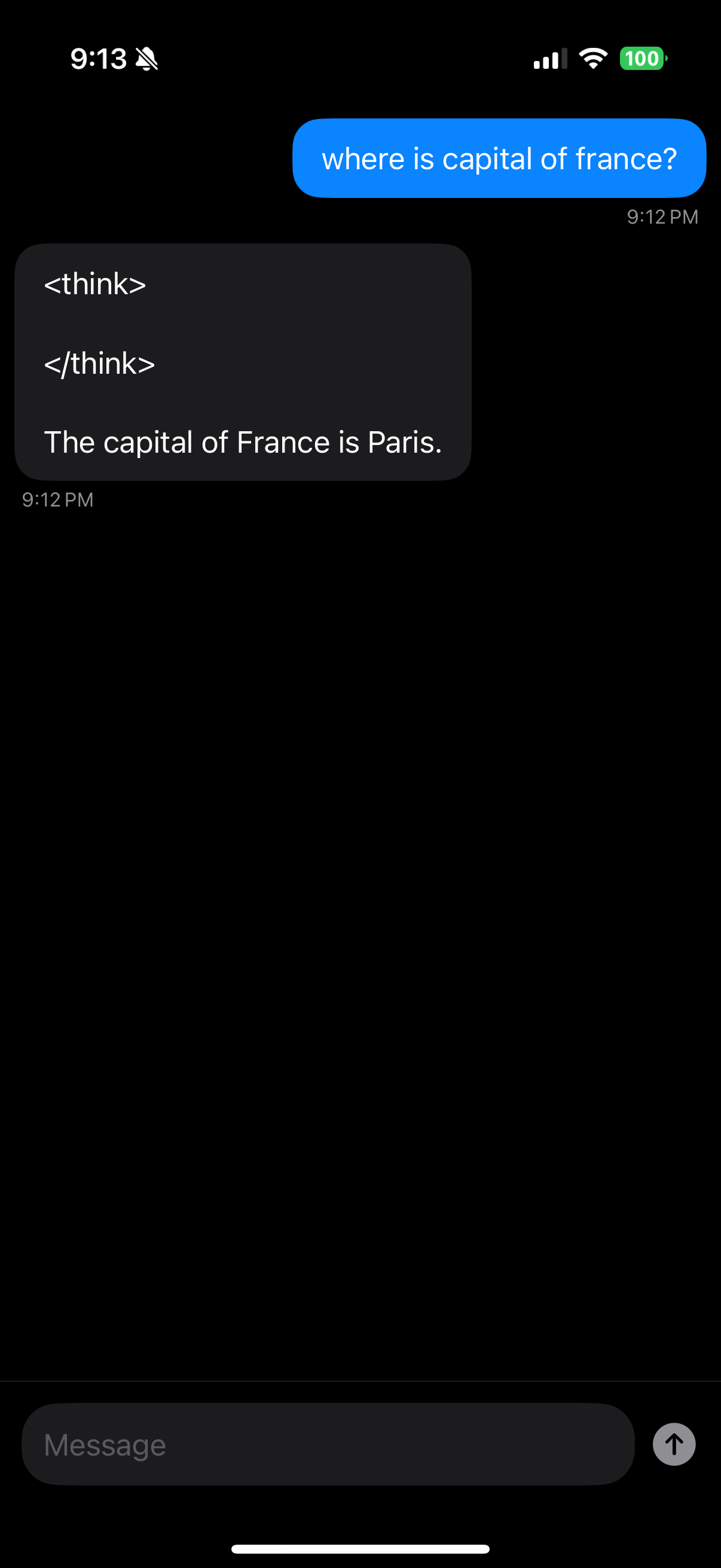

Screenshots