Melange API

The fastest route to high-performance on-device NPU acceleration

We enable True On-Device AI by making NPU acceleration accessible and effortless:

Fully Automated NPU Utilization

No manual driver management. We handle the hardware abstraction.

Unified End-to-End Workflow

From model training to on-device deployment in one pipeline.

Heterogeneous Hardware Orchestration

Unified support for heterogeneous NPUs across all Android and iOS devices.

Rapid Deployment

Ship production-ready AI in hours, not months.

Naming Convention

We use the name Melange for the product.

However, you will see MLange used in the source code and library (e.g., ZeticMLangeModel).

Quick Start

Generate Model Key and Personal Key

Go to the Melange Dashboard to generate your keys.

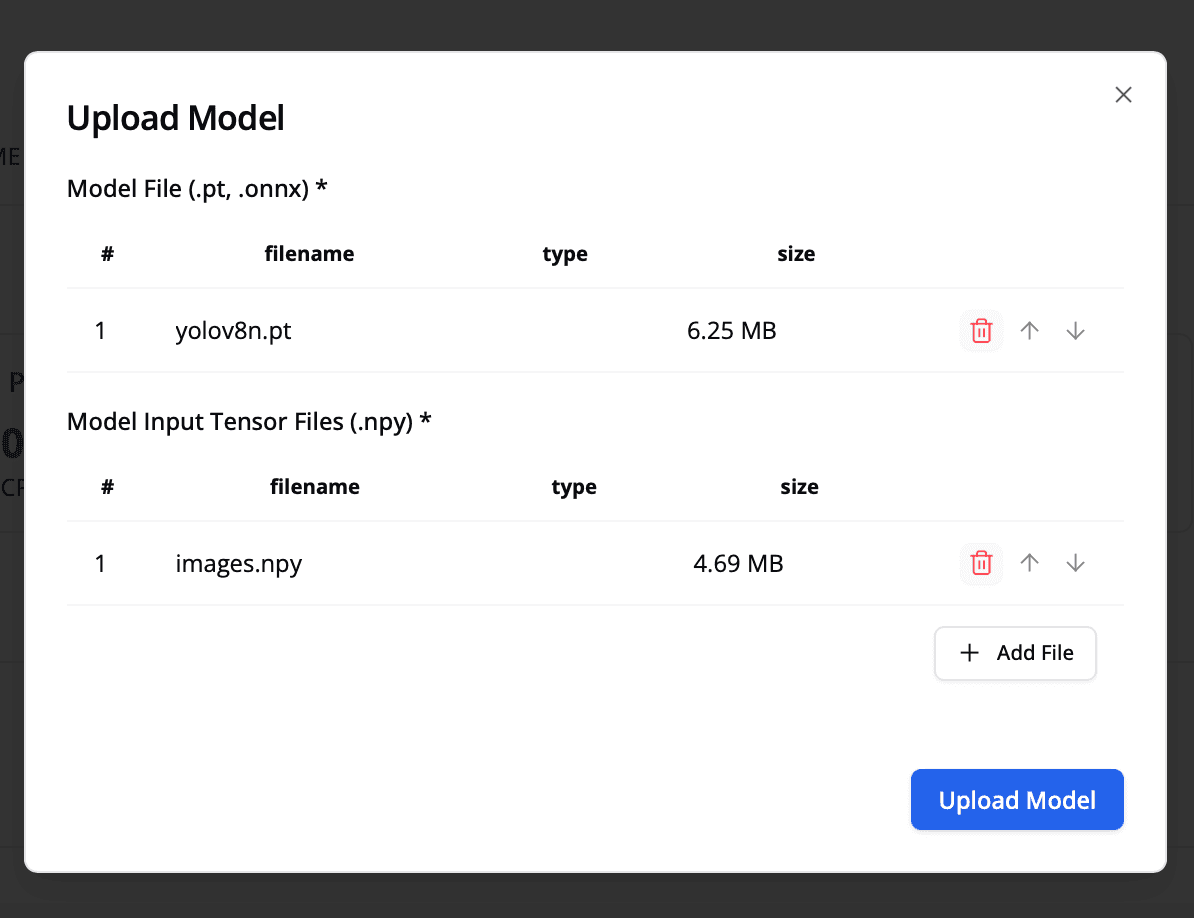

1. Upload & Auto-Compilation

Simply upload your model file to our dashboard. Melange automatically analyzes, quantizes, and compiles the graph for heterogeneous NPU targets in the background.

- Supported model formats:

  - PyTorch Exported Program (.pt2)
  - ONNX Model (.onnx)
  - TorchScript Model (.pt) (To Be Deprecated)
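As a rough illustration of the format list above, the following sketch checks a model file's extension locally before you upload it. It is not part of Melange: the function name `check_model_file` and the `SUPPORTED_FORMATS` table are hypothetical, and the dashboard presumably performs its own validation. For reference, `.pt2` files are typically written with `torch.export.save`, `.onnx` files with `torch.onnx.export`, and `.pt` files with `torch.jit.save`.

```python
import warnings
from pathlib import Path

# Extension -> (format name, deprecated?), copied from the supported-format list.
# This table is an illustrative assumption, not something shipped by Melange.
SUPPORTED_FORMATS = {
    ".pt2": ("PyTorch Exported Program", False),
    ".onnx": ("ONNX Model", False),
    ".pt": ("TorchScript Model", True),  # listed as "To Be Deprecated"
}


def check_model_file(path: str) -> str:
    """Return the format name for a model file, or raise ValueError if unsupported."""
    suffix = Path(path).suffix.lower()
    if suffix not in SUPPORTED_FORMATS:
        supported = ", ".join(sorted(SUPPORTED_FORMATS))
        raise ValueError(
            f"Unsupported model file {path!r}; expected one of: {supported}"
        )
    name, deprecated = SUPPORTED_FORMATS[suffix]
    if deprecated:
        # Nudge toward .pt2 or .onnx, since TorchScript support is going away.
        warnings.warn(
            f"{name} ({suffix}) is to be deprecated; consider .pt2 or .onnx",
            DeprecationWarning,
            stacklevel=2,
        )
    return name
```

Calling `check_model_file("resnet.pt2")` returns the format name, while a path such as `model.h5` raises `ValueError` before any upload is attempted.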

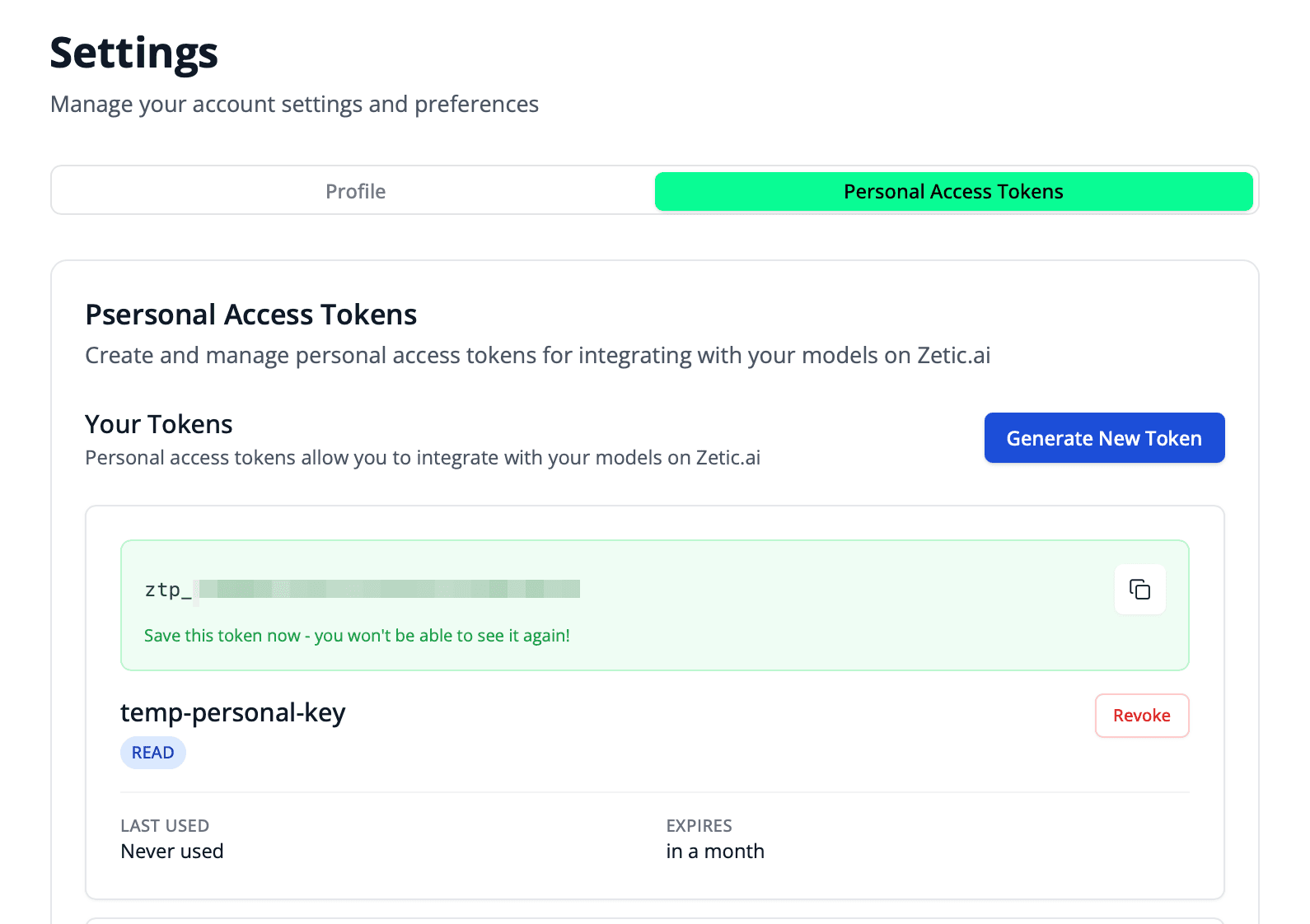

2. Get Your Deployment Keys

Once optimized, specific keys are provisioned for your project.

- Model Key: The unique identifier for your hardware-accelerated binary.

- Personal Key: Your secure credential for on-device authentication.

For comprehensive details on key provisioning and security policies, please consult:

EZ Tip: You don't have to remember the keys

The Melange Dashboard provides ready-to-use source code with your keys pre-filled, so you can copy and paste it directly into your project.

Integrate SDK & Run Inference

Initialize the ZeticMLangeModel with your keys to trigger hardware-accelerated execution.

Android (Kotlin)

// Zero-copy NPU Inference
val model = ZeticMLangeModel(CONTEXT, "YOUR_PERSONAL_KEY", "YOUR_MODEL_NAME")
model.run(YOUR_INPUT_TENSORS)

iOS (Swift)

// Zero-copy NPU Inference
let model = try ZeticMLangeModel("YOUR_PERSONAL_KEY", "YOUR_MODEL_NAME")
model.run(YOUR_INPUT_TENSORS)

Explore Documentation

What is Melange?

Learn about Melange and its key features

How it Works

Understand the workflow and architecture

Model Profiling

Global device benchmark and optimization

Examples

Explore real-world implementation examples

Need Help?

Melange is evolving rapidly. If you run into any issues or have questions, please contact ZETIC.ai.